A Step-by-Step Guide to Why DeepSeek is So Exciting

Bassey asked me to write about DeepSeek and I was thinking about what I could add to the conversation. Not only is the tech media talking about it, the finance media I use to escape the tech media is talking about it. Tomorrow Ezra Klein is going to bring in a guest to talk about DeepSeek’s impact on young men and I will be truly cornered.

What I think I can add to all this is some context about the core innovation. This new R1 model will almost certainly be granted a place in AI folklore for its audacity and innovation. Why? And why should you care? There are many reasons, but first we need to talk about how LLMs are trained.

Part 1: Supervised Training of a Base Model

The first step to train a large language model is to compile as much text data as possible. Say, the internet, its archives, every book that’s been digitized and some stuff that you maybe shouldn’t have (e.g. the archive of Reddit, the archive of The New York Times, the archive of Twitter etc.). You ignore the legal issues, write a computer program to split the text into subwords called “tokens” and train a very large neural network model to try to predict the next token given a “context window” of previous tokens.

It’s a simple game. You read the computer a paragraph of text, stop at a random point and ask the computer to guess the next word.
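To make the game concrete, here is a minimal sketch of how a piece of text turns into guessing exercises. The function names are mine and purely illustrative, and splitting on whitespace is a stand-in for a real subword tokenizer.

```python
def split_into_tokens(text):
    # Stand-in tokenizer: real systems split text into subword tokens;
    # whitespace splitting is only for illustration.
    return text.split()


def next_token_examples(tokens, context_window):
    # For every position, pair the preceding tokens (capped at the
    # context window) with the token the model has to guess.
    examples = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_window):i]
        examples.append((context, tokens[i]))
    return examples


tokens = split_into_tokens("the cat sat on the mat")
for context, target in next_token_examples(tokens, context_window=3):
    print(context, "->", target)
```

Each printed line is one round of the game: the context the model sees and the word it is asked to guess.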

This approach will produce a model that can guess the correct next word with shockingly high accuracy. Since a lot of the internet involves people asking questions and explaining things, you can use this next word prediction machine to spit out an “answer” to a question by iteratively generating the next best word until the next best word is the <END OF OUTPUT> token.
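The generate-until-the-end-token loop can be sketched with a toy predictor. This one just counts which word follows which in a tiny made-up corpus; it is an assumption for illustration, not how a real LLM predicts, since a real model is a neural network that looks at the whole context window.

```python
from collections import Counter, defaultdict

END = "<END OF OUTPUT>"


def train_bigram_counts(sentences):
    # Count which word follows which. A real LLM replaces this table
    # with a neural network conditioned on the whole context window.
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split() + [END]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_tokens=20):
    # Repeatedly append the most likely next word until END appears.
    words = prompt.split()
    for _ in range(max_tokens):
        followers = counts.get(words[-1])
        if not followers:
            break
        next_word = followers.most_common(1)[0][0]
        if next_word == END:
            break
        words.append(next_word)
    return " ".join(words)


corpus = [
    "what is the capital of france ? paris",
    "what is the capital of italy ? rome",
    "what is the capital of france ? paris",
]
counts = train_bigram_counts(corpus)
print(generate(counts, "what is the capital of france ?"))
```

Because this toy only looks at the single previous word, it would answer "paris" for Italy too. That weakness is exactly why real models condition on a long context window.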

OpenAI trained their GPT-3 model using this approach, and it worked great! Answered all kinds of questions. But there were some issues. Chiefly that the thing didn’t always follow the decorum of polite society. Or put more bluntly, it could be impolite and racist. It needed to go to finishing school.

Part 2: Reinforcement Learning from Human Feedback

OpenAI’s solution to its misbehaving language model was to “teach” it how to act better using human feedback.

The process is a little convoluted, but here it is at a high level:

- Train an LLM using supervised training (part 1)

- Prompt the LLM to output multiple responses to questions. For example, ask it to give you two different options for a short thank you note to your mother.

- Use humans to pick the better version of the output

- Make a copy of the LLM and train it to predict which of the original LLM’s responses are more likely to be chosen by the human. This copy is usually called the “reward model”; I’ll call it the “teacher model” here.

- Now go back to asking your original LLM to give you multiple responses to your questions, but this time use your teacher model to pick which one is better and train on the good ones. (Human judgements are expensive.)

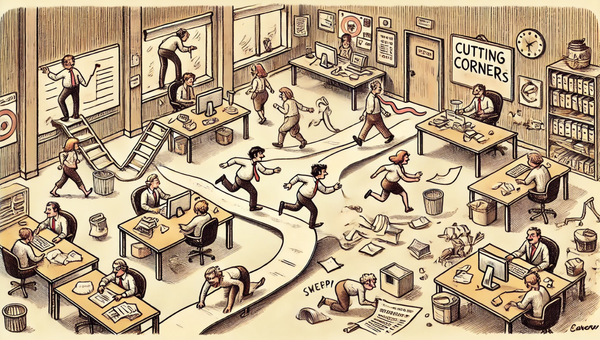

Essentially they trained a model to teach the original model to behave better. This process is (1) convoluted and (2) expensive because humans are expensive and running inference on one LLM to train another LLM is slow.
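Here is a toy sketch of the teacher idea, under heavy assumptions. In the real process the teacher is a copy of the LLM; here it is a two-weight linear scorer over hand-made features (`says_thank_you` and `contains_insult` are hypothetical, invented for this example). It learns from pairs of human-chosen and human-rejected responses, then picks the better of two new candidates. The final step of actually updating the original LLM is left out.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(weights, features):
    return sum(w * f for w, f in zip(weights, features))


def train_teacher(preference_pairs, n_features, lr=0.5, epochs=200):
    # Each pair is (features of the response the human chose,
    # features of the response the human rejected).
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in preference_pairs:
            margin = score(weights, chosen) - score(weights, rejected)
            # Gradient step on the pairwise loss -log(sigmoid(margin)).
            step = lr * (1.0 - sigmoid(margin))
            for i in range(n_features):
                weights[i] += step * (chosen[i] - rejected[i])
    return weights


def pick_best(weights, candidates):
    return max(candidates, key=lambda c: score(weights, c["features"]))


# Hypothetical features: [says_thank_you, contains_insult]
human_preferences = [
    ([1, 0], [0, 0]),  # human preferred the note that says thank you
    ([1, 0], [1, 1]),  # human rejected the note with an insult
    ([0, 0], [0, 1]),
]
teacher = train_teacher(human_preferences, n_features=2)

candidates = [
    {"text": "Thanks, Mom!", "features": [1, 0]},
    {"text": "Whatever.", "features": [0, 1]},
]
print(pick_best(teacher, candidates)["text"])
```

Once the teacher exists, no human is needed to rank new candidates, which is the whole point of training it.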

Despite these challenges, the process worked and is a key innovation behind the ChatGPT product and other LLMs-as-a-service competitors. There’s a variant of the above process called Direct Preference Optimization (DPO) that is more elegant and less convoluted, but also requires humans to give preferences.
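For the curious, the DPO objective for a single human preference fits in a few lines. This is a sketch of the standard published loss, not anything specific to OpenAI or DeepSeek. The inputs are log-probabilities that the model being trained (the policy) and a frozen reference copy assign to the chosen and rejected responses; the parameter names and the default `beta` are my own choices for illustration.

```python
import math


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    # How much more (in log-probability) the policy likes each response
    # than the frozen reference model does.
    chosen_shift = policy_logp_chosen - ref_logp_chosen
    rejected_shift = policy_logp_rejected - ref_logp_rejected
    margin = beta * (chosen_shift - rejected_shift)
    # Equivalent to -log(sigmoid(margin)).
    return math.log(1.0 + math.exp(-margin))


# Policy identical to the reference: no preference learned yet.
print(dpo_loss(-5.0, -5.0, -5.0, -5.0))
# Policy now favors the chosen response: the loss goes down.
print(dpo_loss(-4.0, -6.0, -5.0, -5.0))
```

There is no separate teacher model here: the human preference pairs train the LLM directly, which is why the approach is less convoluted.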

Part 3: The Rise of Reasoning

In September 2024, OpenAI released their o1 model that could “reason” through complex problems. It blew away their previous best models on coding and math exams. On a qualifying exam for the International Mathematical Olympiad, o1 solved a staggering 83% of the problems compared to only 13% for GPT-4o.

Until a week ago, it was a mystery how the o1 model was trained. People had theories, but training these things is super expensive and the companies with the resources to do it rarely have the incentives to share their intelligence with the world.

Part 4: Enter DeepSeek

Last week, a small Chinese company called DeepSeek published a paper detailing how they trained a model named R1 that is competitive with o1. They also open sourced the model, making it available for use by companies and hobbyists around the world.

The trick turned out to be a combination of two simple ideas. DeepSeek did not come up with these ideas, but they executed them at scale and told us how they did it.

The first idea is to replace the reinforcement learning from human feedback step (part 2) with a “fine tuning” process using math and coding problems. The quality of a thank you note is subjective; whether a value of ‘x’ makes the left side of an equation equal the right side is not. So goodbye humans-in-the-loop and hello massive amounts of programmatically validated data.
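A programmatic check like this is easy to write. The sketch below is my own illustration, not DeepSeek’s code: `equation_reward` is a hypothetical function that scores a proposed value of x by plugging it into both sides of the equation.

```python
def equation_reward(lhs, rhs, proposed_x, tolerance=1e-9):
    # lhs and rhs are functions of x. The reward is 1.0 only if the
    # proposed value makes the two sides equal (within a tolerance).
    return 1.0 if abs(lhs(proposed_x) - rhs(proposed_x)) <= tolerance else 0.0


# Example problem: 3x + 4 = 19
def left_side(x):
    return 3 * x + 4


def right_side(x):
    return 19


print(equation_reward(left_side, right_side, 5))  # correct answer
print(equation_reward(left_side, right_side, 4))  # wrong answer
```

No human has to look at the answer, so you can score millions of model attempts for the price of the compute.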

The second idea is to use a model prompt that encourages the model to output intermediate steps before giving a final answer. The prompt they use is below.

Notice the <think></think> and <answer></answer> tags. The model will now take a stab at an answer, then double check that guess before answering for real. This prompting technique is known as “chain of thought” and has been shown to improve the accuracy of responses. Sometimes the obvious answer is not correct and we must think again. This training routine pushes the model to learn those reasoning patterns.
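The tags also make the output easy to handle in code. The sketch below uses a paraphrased template of my own wording (not the exact prompt from the paper) and a hypothetical `format_reward` that checks a response follows the think-then-answer structure, so the final answer can be pulled out and graded.

```python
import re

# Paraphrased for illustration; not the exact wording from the paper.
TEMPLATE = (
    "The assistant first reasons inside <think> </think> tags, "
    "then gives the final answer inside <answer> </answer> tags.\n"
    "User: {question}\nAssistant:"
)

RESPONSE_PATTERN = re.compile(
    r"\s*<think>(.*?)</think>\s*<answer>(.*?)</answer>\s*", re.DOTALL
)


def parse_response(text):
    # Returns (reasoning, answer), or None if the tags are missing
    # or out of order.
    match = RESPONSE_PATTERN.fullmatch(text)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


def format_reward(text):
    # 1.0 if the response follows the think-then-answer structure.
    return 1.0 if parse_response(text) is not None else 0.0


print(TEMPLATE.format(question="Solve 3x + 4 = 19 for x."))
response = "<think>3x = 15, so x = 5</think>\n<answer>5</answer>"
print(parse_response(response))
print(format_reward("The answer is 5"))
```

Once the answer is extracted from the tags, it can be handed to a programmatic checker, while the reasoning inside the think tags is left for the model to use however it likes.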

One fun thing about DeepSeek’s chatbot compared to ChatGPT is that it surfaces the chain of reasoning. Casey Newton thinks this is a design innovation in the so-far clunky chatbot product space. OpenAI was apparently concerned that surfacing the chain-of-thought reasoning would make replicating the model easier, so it hides the reasoning babble from users. DeepSeek has no business model to speak of and lets its model openly babble at you.

You may be wondering if the reasoning training replaces the anti-racist, pro-politeness training. DeepSeek does both, but it remains unclear how best to combine the two techniques. The relevant section in the paper is this:

"To further align the model with human preferences, we implement a secondary reinforcement learning stage aimed at improving the model’s helpfulness and harmlessness while simultaneously refining its reasoning capabilities."

Why does this all matter?

Lots of reasons!

- It’s a very cool technical achievement! And they open sourced the model. Buy or rent a GPU and you can have one of the world’s most advanced LLMs at your fingertips.

- DeepSeek is a Chinese company whose employees were all educated in China. There are geopolitical implications to China beating the US in AI. (“This is a Sputnik moment”)

- Last month, DeepSeek published a different paper explaining how they trained their base model for a fraction of the cost (read: fraction of the GPU compute) of what OpenAI spends. If some small-ish Chinese company can make a competitive model to OpenAI’s state-of-the-art model, what is OpenAI’s edge? What is the edge of any of these foundational AI companies?

- DeepSeek’s achievement questions the seemingly infinite demand predicted for Nvidia’s GPUs. (This is likely overblown because companies still need GPUs to run models, not just to train them.)

- OpenAI subsequently accused DeepSeek of “inappropriately” using their data.

- Turns out average chatbot users love to see the reasoning steps!

My favorite take so far was from Matt Levine, who suggested DeepSeek’s business model could come from shorting its competitors every time it releases a cheaper, competitive model. Here at MoP we do not endorse short selling Nvidia, but we love to read hypothetical accounts about those who do.

That's all for now. As always, please send us your questions at machinesonpaper@gmail.com or comment below. (And a h/t to Matthew Scharf on my team who gave a great presentation on this topic at our reading group last week. Run reading groups, you'll learn a lot.)
